可观测-金年会app官方网

在日常开发中的日志相关的实践

1、zap日志库

2、中间件客户端日志接口的实现

3、efk日志,日志收集与展示

4、k8s下多pod,日志收集与展示

5、报警

1、zap日志

日志库是项目开始首先就要确定的库,zap可以说是goland中使用的最广泛的库,这是一个我的配置

package zaplog

import (

"github.com/luxun9527/zaplog/report"

"github.com/mitchellh/mapstructure"

"go.uber.org/zap"

"go.uber.org/zap/zapcore"

"gopkg.in/natefinch/lumberjack.v2"

"log"

"os"

"reflect"

"time"

)

const (

// _defaultbuffersize specifies the default size used by buffer.

_defaultbuffersize = 256 * 1024 // 256 kb

// _defaultflushinterval specifies the default flush interval for

// buffer.

_defaultflushinterval = 30 * time.second

)

const (

_file = "file"

_console = "console"

)

type config struct {

name string `json:",optional" mapstructure:"name"`

//日志级别 debug info warn panic

level zap.atomiclevel `json:"level" mapstructure:"level"`

//在error级别的时候 是否显示堆栈

stacktrace bool `json:",default=true" mapstructure:"stacktrace"`

//添加调用者信息

addcaller bool `json:",default=true" mapstructure:"addcaller"`

//调用链,往上多少级 ,在一些中间件,对日志有包装,可以通过这个选项指定。

callership int `json:",default=3" mapstructure:"callership"`

//输出到哪里标准输出console,还是文件file

mode string `json:",default=console" mapstructure:"mode"`

//文件名称加路径

filename string `json:",optional" mapstructure:"filename"`

//error级别的日志输入到不同的地方,默认console 输出到标准错误输出,file可以指定文件

errorfilename string `json:",optional" mapstructure:"errorfilename"`

// 日志文件大小 单位mb 默认500mb

maxsize int `json:",optional" mapstructure:"maxsize"`

//日志保留天数

maxage int `json:",optional" mapstructure:"maxage"`

//日志最大保留的个数

maxbackup int `json:",optional" mapstructure:"maxbackup"`

//异步日志 日志将先输入到内存到,定时批量落盘。如果设置这个值,要保证在程序退出的时候调用sync(),在开发阶段不用设置为true。

async bool `json:",optional" mapstructure:"async"`

//是否输出json格式

json bool `json:",optional" mapstructure:"json"`

//是否日志压缩

compress bool `json:",optional" mapstructure:"compress"`

// file 模式是否输出到控制台

console bool `json:"console" mapstructure:"console"`

// 非json格式,是否加上颜色。

color bool `json:",default=true" mapstructure:"color"`

//是否report

isreport bool `json:",optional" mapstructure:"isreport"`

//report配置

reportconfig report.reportconfig `json:",optional" mapstructure:"reportconfig"`

options []zap.option

}

func (lc *config) updatelevel(level zapcore.level) {

lc.level.setlevel(level)

}

func (lc *config) build() *zap.logger {

if lc.mode != _file && lc.mode != _console {

log.panicln("mode must be console or file")

}

if lc.mode == _file && lc.filename == "" {

log.panicln("file mode, but file name is empty")

}

var (

ws zapcore.writesyncer

errorws zapcore.writesyncer

encoder zapcore.encoder

)

encoderconfig := zapcore.encoderconfig{

//当存储的格式为json的时候这些作为可以key

messagekey: "message",

levelkey: "level",

timekey: "time",

namekey: "logger",

callerkey: "caller",

stacktracekey: "stacktrace",

lineending: zapcore.defaultlineending,

//以上字段输出的格式

encodelevel: zapcore.lowercaselevelencoder,

encodetime: customtimeencoder,

encodeduration: zapcore.secondsdurationencoder,

encodecaller: zapcore.fullcallerencoder,

}

if lc.mode == _console {

ws = zapcore.lock(os.stdout)

} else {

normalconfig := &lumberjack.logger{

filename: lc.filename,

maxsize: lc.maxsize,

maxage: lc.maxage,

maxbackups: lc.maxbackup,

localtime: true,

compress: lc.compress,

}

if lc.errorfilename != "" {

errorconfig := &lumberjack.logger{

filename: lc.errorfilename,

maxsize: lc.maxsize,

maxage: lc.maxage,

maxbackups: lc.maxbackup,

localtime: true,

compress: lc.compress,

}

errorws = zapcore.lock(zapcore.addsync(errorconfig))

}

ws = zapcore.lock(zapcore.addsync(normalconfig))

}

//是否加上颜色。

if lc.color && !lc.json {

encoderconfig.encodelevel = zapcore.capitalcolorlevelencoder

}

encoder = zapcore.newconsoleencoder(encoderconfig)

if lc.json {

encoder = zapcore.newjsonencoder(encoderconfig)

}

if lc.async {

ws = &zapcore.bufferedwritesyncer{

ws: ws,

size: _defaultbuffersize,

flushinterval: _defaultflushinterval,

}

if errorws != nil {

errorws = &zapcore.bufferedwritesyncer{

ws: errorws,

size: _defaultbuffersize,

flushinterval: _defaultflushinterval,

}

}

}

var c = []zapcore.core{zapcore.newcore(encoder, ws, lc.level)}

if errorws != nil {

highcore := zapcore.newcore(encoder, errorws, zapcore.errorlevel)

c = append(c, highcore)

}

//文件模式同时输出到控制台

if lc.mode == _file && lc.console {

consolews := zapcore.newcore(encoder, zapcore.lock(os.stdout), zapcore.errorlevel)

c = append(c, consolews)

}

if lc.isreport {

//上报的格式一律json

if !lc.json {

encoderconfig.encodelevel = zapcore.lowercaselevelencoder

encoder = zapcore.newjsonencoder(encoderconfig)

}

//指定级别的日志上报。

highcore := zapcore.newcore(encoder, report.newreportwriterbuffer(lc.reportconfig), lc.reportconfig.level)

c = append(c, highcore)

}

core := zapcore.newtee(c...)

logger := zap.new(core)

//是否新增调用者信息

if lc.addcaller {

lc.options = append(lc.options, zap.addcaller())

if lc.callership != 0 {

lc.options = append(lc.options, zap.addcallerskip(lc.callership))

}

}

//当错误时是否添加堆栈信息

if lc.stacktrace {

//在error级别以上添加堆栈

lc.options = append(lc.options, zap.addstacktrace(zap.errorlevel))

}

if lc.name != "" {

logger = logger.with(zap.string("project", lc.name))

}

return logger.withoptions(lc.options...)

}

func customtimeencoder(t time.time, enc zapcore.primitivearrayencoder) {

enc.appendstring(t.format("2006-01-02-15:04:05"))

}

// stringtologlevelhookfunc viper的string转zapcore.level

func stringtologlevelhookfunc() mapstructure.decodehookfunc {

return func(

f reflect.type,

t reflect.type,

data interface{}) (interface{}, error) {

if f.kind() != reflect.string {

return data, nil

}

atomiclevel, err := zap.parseatomiclevel(data.(string))

if err != nil {

return data, nil

}

// convert it by parsing

return atomiclevel, nil

}

}json格式,推荐测试,生产阶段使用,对后续的分析更加友好

{"level":"debug","time":"2024-08-31-22:26:39","caller":"e:/openproject/zaplog/zap_config_test.go:43","message":"test level debug","project":"test"}

{"level":"info","time":"2024-08-31-22:26:39","caller":"e:/openproject/zaplog/zap_config_test.go:44","message":"info level info","project":"test"}

{"level":"warn","time":"2024-08-31-22:26:39","caller":"e:/openproject/zaplog/zap_config_test.go:45","message":"warn level warn","project":"test"}

{"level":"error","time":"2024-08-31-22:26:39","caller":"e:/openproject/zaplog/zap_config_test.go:46","message":"error level error","project":"test"}

{"level":"panic","time":"2024-08-31-22:26:39","caller":"e:/openproject/zaplog/zap_config_test.go:47","message":"panic level panic","project":"test","stacktrace":"github.com/luxun9527/zaplog.testconsolejson\n\te:/openproject/zaplog/zap_config_test.go:47\ntesting.trunner\n\te:/goroot/src/testing/testing.go:1689"}

--- fail: testconsolejson (0.00s)

panic: panic level panic [recovered]

panic: panic level panic非json格式。推荐开发阶段使用。可读性比较好。

2024-08-31-22:26:07 debug e:/openproject/zaplog/zap_config_test.go:43 test level debug {"project": "test"}

2024-08-31-22:26:07 info e:/openproject/zaplog/zap_config_test.go:44 info level info {"project": "test"}

2024-08-31-22:26:07 warn e:/openproject/zaplog/zap_config_test.go:45 warn level warn {"project": "test"}

2024-08-31-22:26:07 error e:/openproject/zaplog/zap_config_test.go:46 error level error {"project": "test"}

2024-08-31-22:26:07 panic e:/openproject/zaplog/zap_config_test.go:47 panic level panic {"project": "test"}

github.com/luxun9527/zaplog.testconsolejson

e:/openproject/zaplog/zap_config_test.go:47

testing.trunner

e:/goroot/src/testing/testing.go:1689

--- fail: testconsolejson (0.00s)

panic: panic level panic [recovered]

panic: panic level panic2、go中间件日志

在日常开发中,有一些日志不是并不是我们输出的,比如,一些中间件的sdk提供输出的日志。

如es,gorm,kafka,但是这样sdk都提供了自定义的日志输出接口。开发阶段首先要去确定封装好。

gorm

示例es

/*

elastic.seterrorlog(eserrorlog), // 启用错误日志

elastic.setinfolog(esinfolog), // 启用信息日志

*/

var (

erroreslogger = &eslogger{

logger: logger.with(zap.string("module", esmodulekey)).sugar(),

level: zapcore.errorlevel,

}

infoeslogger = &eslogger{

logger: logger.with(zap.string("module", esmodulekey)).sugar(),

level: zapcore.errorlevel,

}

)

type eslogger struct {

logger *zap.sugaredlogger

level zapcore.level

}

func (eslog *eslogger) printf(format string, v ...interface{}) {

if eslog.level == zapcore.infolevel {

eslog.logger.infof(format, v...)

} else {

eslog.logger.errorf(format, v...)

}

}3、efk日志收集与展示

efk安装

e :elasticsearch 日志的存储。

f: filebeat 日志收集,从日志文件中收集。

k: kibana 日志的展示。

filebeat配置。

# 可参考 https://www.elastic.co/guide/en/beats/filebeat/7.14/filebeat-reference-yml.html

# 收集系统日志

filebeat:

inputs:

- type: log

enabled: true

paths:

- /usr/share/filebeat/my-log/demo*.log

tags: [ "es" ]

# json 解析

json.keys_under_root: true # 将解析出的键值对直接放到根对象下

json.add_error_key: true # 如果解析出错,增加一个包含错误信息的字段

json.message_key: "message" # 如果 json 对象中有一个字段包含日志的消息内容,可以指定这个字段的名称

# multiline:

# pattern: '^\{' # 匹配以 `{` 开头和 `}` 结尾的 json 行

# negate: false # 如果不匹配,将该行与上一行合并 negate 控制 filebeat 是将匹配到的行还是未匹配到的行作为多行日志的合并依据。true为不匹配的行,false为匹配的行

# match: after # 将不匹配的行合并到前一行

processors:

- drop_fields:

fields: ["host", "ecs", "log", "prospector", "agent", "input", "beat", "offset"]

ignore_missing: true

# 输出到elasticsearch

output.elasticsearch:

hosts: [ "elasticsearch:9200" ] # es地址,可以有多个,用逗号","隔开

username: "elastic" # es用户名

password: "123456" # es密码

indices:

- index: "%{[project]}-%{ yyyy.mm.dd}" # 使用日志中的字段名

when.contains:

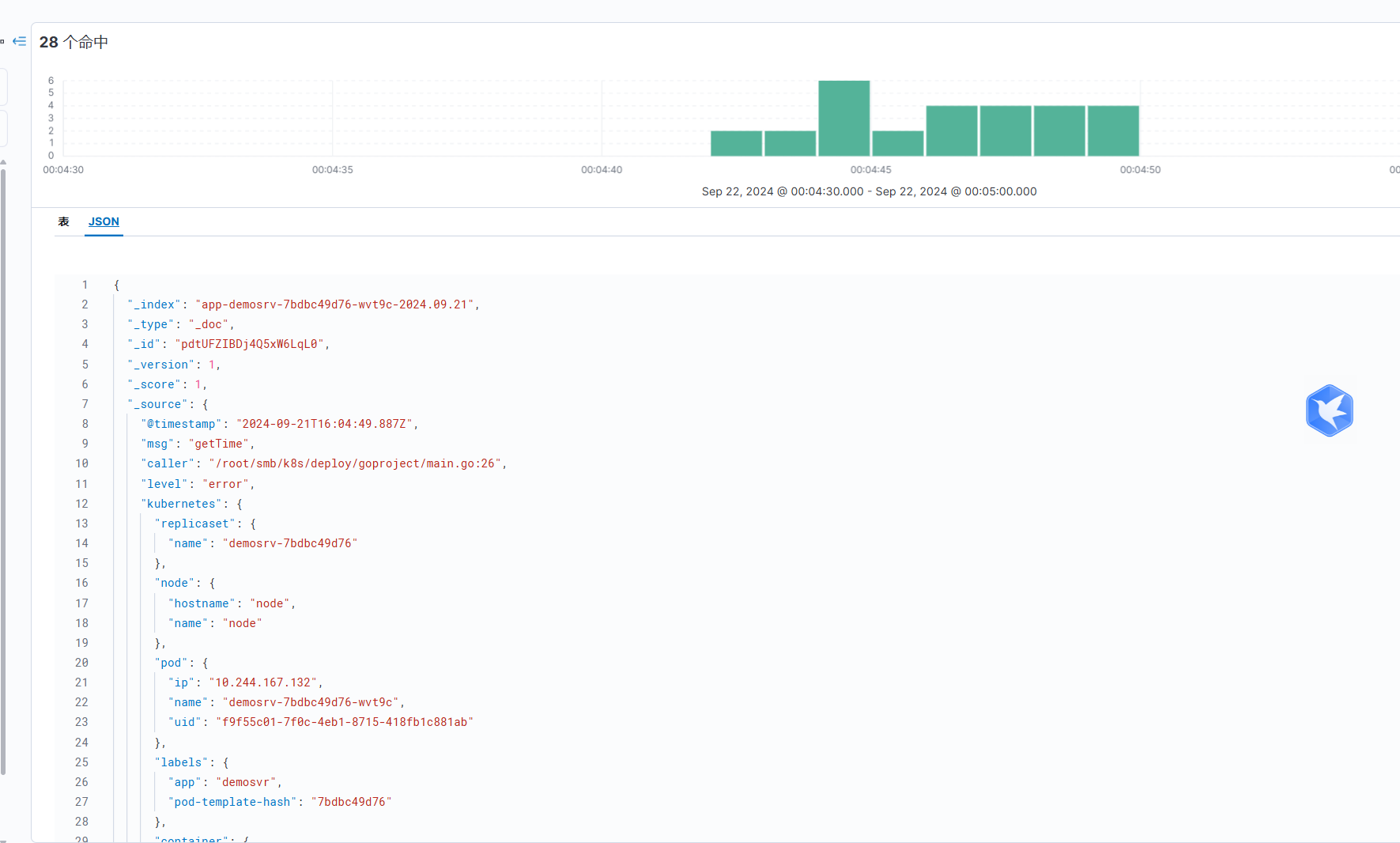

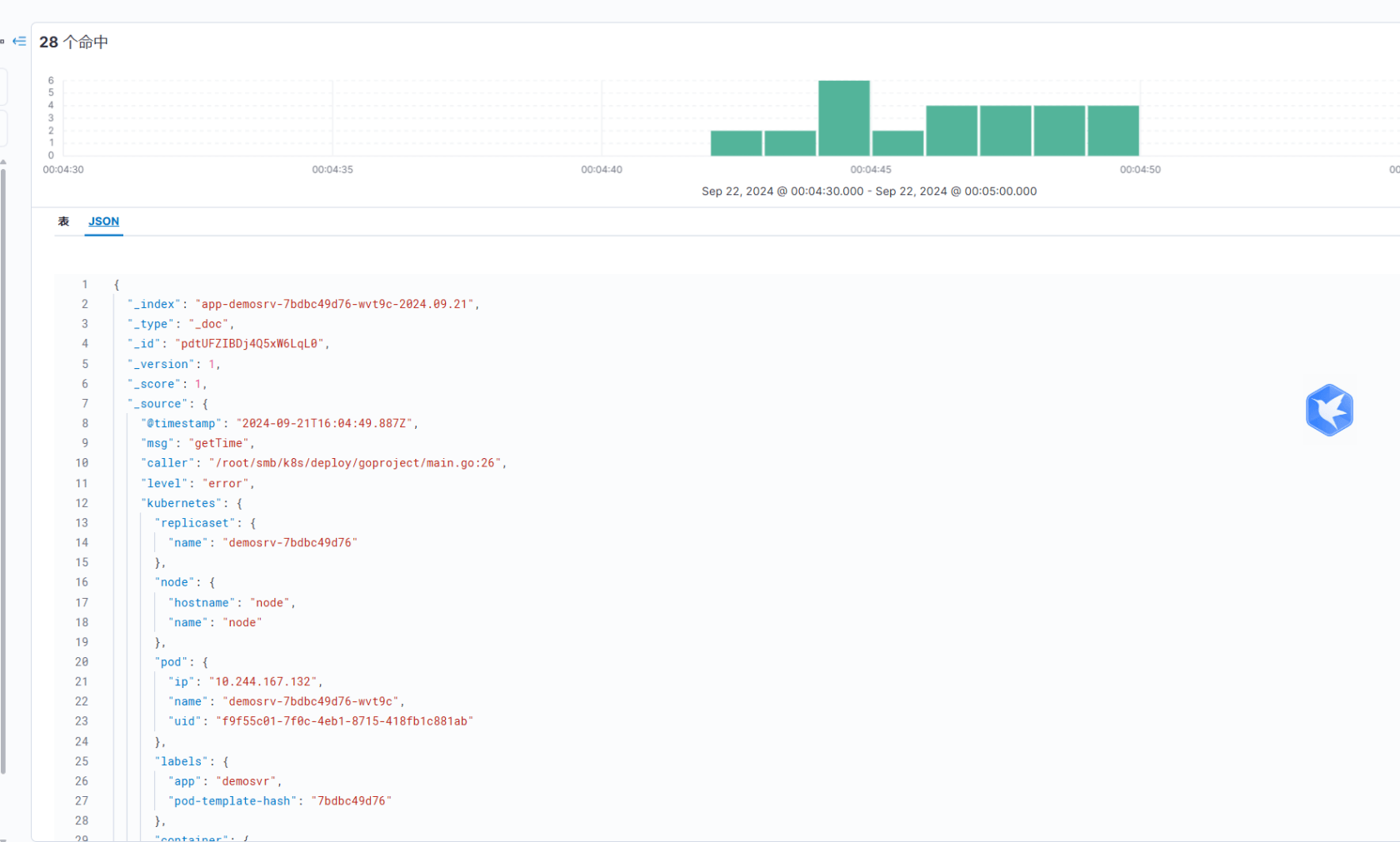

tags: "es"使用docker-compose安装后直接就可以看到了,这个是在kibana中看到的效果。

4、k8s下多pod,日志收集与展示

前置:

k8s中pod的日志在/var/log/containers/目录下

k8s中可以通过helm安装efk

参考。

安装es

1、拉取helm

helm repo add elastic https://helm.elastic.co

helm pull elastic/elasticsearch --version 7.17.1pull之后解压可以看到es默认是用使用statefulset headless部署的,作为一个简单示例,只安装一个单节点,且并不配置https证书。

2、创建一个secret存储账号和密码

能有效减少密码暴露的风险。

kubectl create secret generic elastic-credentials \

--from-literal=username=elastic --from-literal=password=admin1233、创建pv

es需要一个30gi的pv, 也是简单创建一个磁盘存储。

apiversion: "v1"

kind: "persistentvolume"

metadata:

name: "es-0"

spec:

capacity:

storage: "50gi"

accessmodes:

- "readwriteonce"

persistentvolumereclaimpolicy: retain

hostpath:

path: /root/smb/k8s/es-04、自定义helm配置

---

## 设置集群名称

clustername: "elasticsearch"

## 设置节点名称

nodegroup: "master"

## 这个节点的角色,可以是数据节点同时也是master节点

roles:

master: "true"

ingest: "true"

data: "true"

remote_cluster_client: "true"

ml: "true"

## 指定镜像与镜像版本,现在国内很多镜像源都无法使用了。

image: "docker.1panel.dev/library/elasticsearch"

imagetag: "7.17.1"

imagepullpolicy: "ifnotpresent"

## 副本数

replicas: 1

## jvm 配置参数

esjavaopts: "-xmx1g -xms1g"

## 部署资源配置(生成环境一定要设置大些)

resources:

requests:

cpu: "1000m"

memory: "2gi"

limits:

cpu: "1000m"

memory: "2gi"

## 数据持久卷配置

persistence:

enabled: true

volumeclaimtemplate:

accessmodes: [ "readwriteonce" ]

resources:

requests:

storage: 30gi

# ============安全配置============

#单机版是yellow

clusterhealthcheckparams: "wait_for_status=yellow&timeout=1s"

#挂载前面的secret

secretmounts:

- name: elastic-certificates

secretname: elastic-certificates

path: /usr/share/elasticsearch/config/certs

protocol: http

esconfig:

elasticsearch.yml: |

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.authc.api_key.enabled: true

service:

enabled: true

labels: {}

labelsheadless: {}

type: nodeport

# consider that all endpoints are considered "ready" even if the pods themselves are not

# https://kubernetes.io/docs/reference/kubernetes-api/service-resources/service-v1/#servicespec

publishnotreadyaddresses: false

nodeport: "32003"

annotations: {}

httpportname: http

transportportname: transport

loadbalancerip: ""

loadbalancersourceranges: []

externaltrafficpolicy: ""

extraenvs:

- name: elastic_username

valuefrom:

secretkeyref:

name: elastic-credentials

key: username

- name: elastic_password

valuefrom:

secretkeyref:

name: elastic-credentials

key: password

# ============调度配置============

## 设置调度策略

## - hard:只有当有足够的节点时 pod 才会被调度,并且它们永远不会出现在同一个节点上

## - soft:尽最大努力调度

antiaffinity: "hard"

## 容忍配置(一般 kubernetes master 或其它设置污点的节点,只有指定容忍才能进行调度,如果测试环境只有三个节点,则可以开启在 master 节点安装应用)

#tolerations:

# - operator: "exists" ##容忍全部污点执行命令安装。

helm install es elasticsearch -f ./extra.yaml安装kibana

类似上面的操作,先下载helm包。

helm pull elastic/kibana --version 7.17.1然后指定一个我们自定义的extra.yaml

---

## 指定镜像与镜像版本

image: "docker.1panel.dev/library/kibana"

imagetag: "7.17.1"

## 配置 集群内elasticsearch 地址

elasticsearchhosts: "http://elasticsearch-master-0.elasticsearch-master-headless.default:9200"

# ============环境变量配置============

## 环境变量配置,这里引入上面设置的用户名、密码 secret 文件

extraenvs:

- name: elasticsearch_username

valuefrom:

secretkeyref:

name: elastic-credentials

key: username

- name: elasticsearch_password

valuefrom:

secretkeyref:

name: elastic-credentials

key: password

# ============资源配置============

resources:

requests:

cpu: "1000m"

memory: "2gi"

limits:

cpu: "1000m"

memory: "2gi"

# ============配置 kibana 参数============

kibanaconfig:

kibana.yml: |

i18n.locale: "zh-cn"

# ============service 配置============

service:

type: nodeport

nodeport: "30601"kibana 是用deploy的方式部署的。

helm install kibana kibana -f ./extra.yaml安装filebeat

helm pull elastic/filebeat --version 7.17.1

image: "registry.cn-hangzhou.aliyuncs.com/zhengqing/filebeat"

imagetag: "7.14.1"

daemonset:

filebeatconfig:

filebeat.yml: |

logging.level: debug

filebeat.inputs:

- type: container

paths:

- /var/log/containers/*.log

processors:

- script:

lang: javascript

id: clean_log_field

source: >

function process(event) {

var message = event.get("message");

}

- decode_json_fields: #解析json

fields: ["message"]

add_error_key: true

target: "" # 解析后的字段将放在根目录下

- add_kubernetes_metadata:

#添加k8s描述字段

default_indexers.enabled: true

default_matchers.enabled: true

host: ${node_name}

matchers:

- logs_path:

logs_path: "/var/log/containers/"

- drop_event:

when:

not:

equals:

kubernetes.namespace: "app" # 只收集指定命名空间下的日志。

- drop_fields:

#删除的多余字段

fields: ["host", "tags", "ecs", "log", "prospector", "agent","input", "beat", "offset","message","container","stream","container","kubernetes.namespace_labels","kubernetes.node.labels","kubernetes.node.uid","kubernetes.deployment","kubernetes.namespace_uid"]

ignore_missing: true

output.elasticsearch:

host: '${node_name}'

hosts: 'http://elasticsearch-master-0.elasticsearch-master-headless.default:9200'

username: "elastic" # es用户名

password: "admin123" # es密码

indices: # 自动创建索引,使用命名空间名 pod名字 时间的格式。

- index: "%{[kubernetes.namespace]}-%{[kubernetes.pod.name]}-%{ yyyy.mm.dd}" # 自定义索引格式

# ============资源配置============

resources:

requests:

cpu: "1000m"

memory: "2gi"

limits:

cpu: "1000m"

memory: "2gi"helm install filebeat filebeat -f ./extra.yaml最终的效果。

5、告警

指定的日志级别告警。将告警信息发送到办公im中

elastic通过办公im的webhook报警这个功能好像是收费的。推荐在zap日志中新增一个core来实现告警功能。具体可以参考我的实现 您的评论,star都是对我的鼓励。

本作品采用《cc 协议》,转载必须注明作者和本文链接

推荐文章: